[woodworking, fitness, maker, diy, covid]

23 Aug 2020

Project Difficulty

- 8/10 (Not for the faint of heart)

After COVID struck and gyms shut down it became pretty clear that I’d need something better than my flimsy piano bench to use as a workout bench.

There was an influx of DIY versions of home workout equipment being advertised on Instagram and YouTube but nothing quite met all my requirements.

This design represents a lot of thinking and planning and takes inspiration from some of the best other DIY designs I could find online. I’ve posted some of my favorite other benches below.

Project Requirements

As usual, I started with a list of project requirements and constraints.

- Sturdy, functional workout bench that can support my weight

- Has an adjustable back for incline and seated exercises

- Stores fitness equipment easily out of view

- Mobile enough for moving in and out of the way

- Looks nice sitting out

Supplies

- 3/4” Plywood (I used Baltic Birch)

- Standard 2x4s

- Standard 2x2s

- #8 Threaded Rod

- #8 washers and nuts

- Various Wood Screws

- Heavy Duty Furniture Casters

- 1/4” Thick Board for cushions

- 1” Foam Padding

- 2 yards of heavy-duty vinyl / faux leather

- Water-Based Polyurethane (or whatever finish you want)

Required Equipment

- Circular Saw / Table Saw

- Track Saw Guide

- Pocket Screw Jig

- Jigsaw

- Drill

- Hack Saw

- Sandpaper

- Measuring Tape

- Level

- File

- Heavy Duty Stapler

- Various Clamps

Cutlist

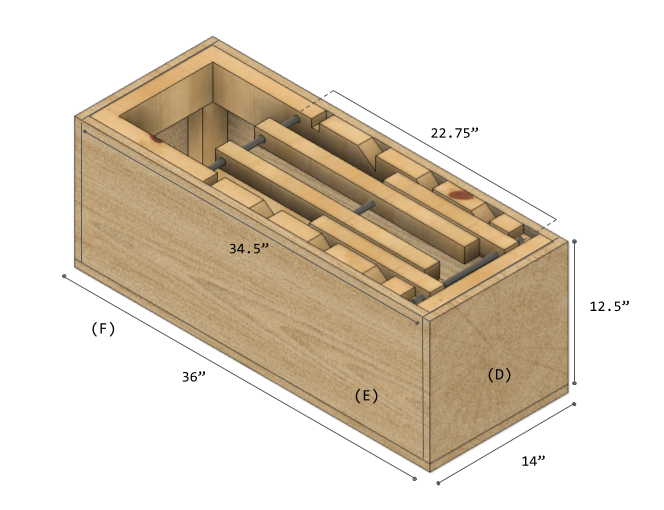

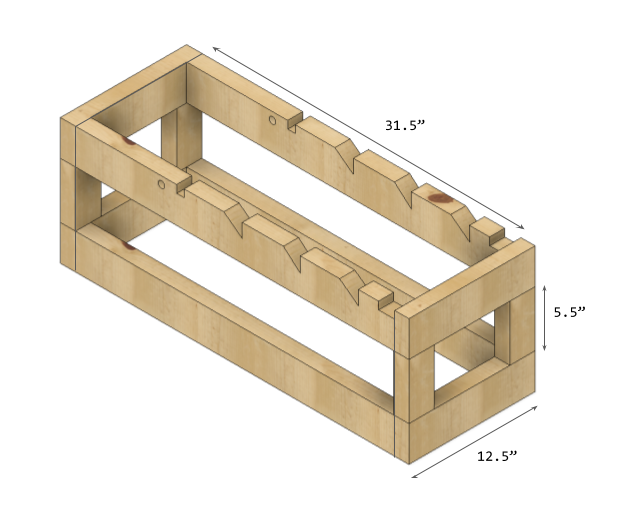

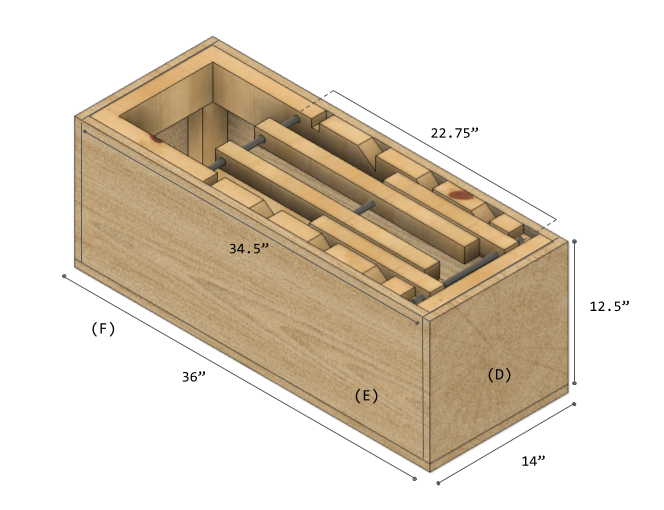

Main Structure

- Four (4) 31.5” 2x4s (A)

- Four (4) 12.5” 2x4s (B)

- Four (4) 5.5” 2x4s (C)

- Two (2) 12.5” x 14” 3/4” Baltic Birch (D)

- Two (2) 12.5” x 34.5” 3/4” Baltic Birch (E)

- One (1) 14” x 36” 3/4” Baltic Birch (F)

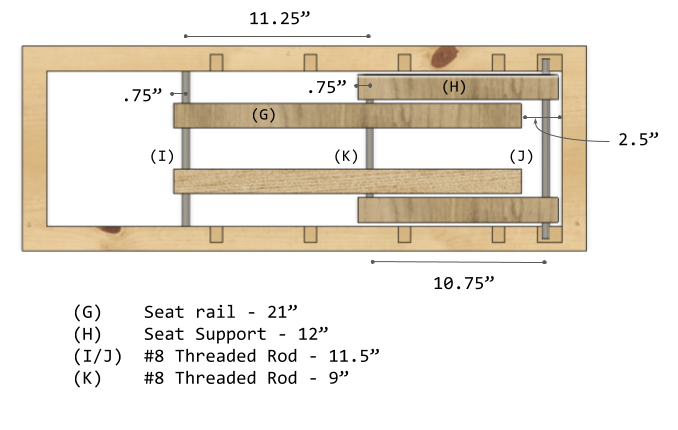

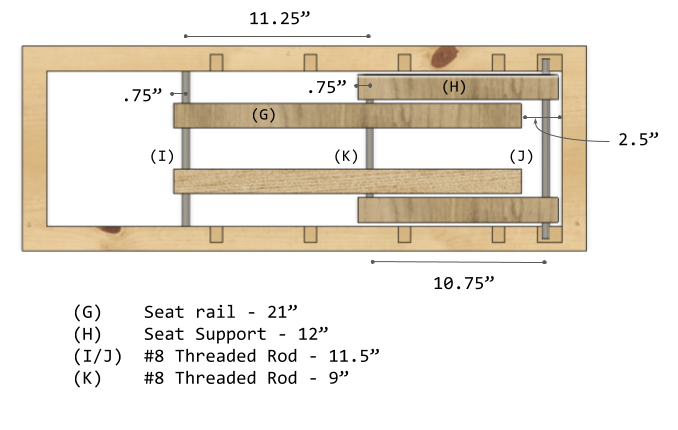

Adjustable Assembly

- Two (2) 21” 2x2 (G)

- Two (2) 12” 2x2 (H)

- Two (2) 11.5” #8 Threaded Steel Rod (I/J)

- One (1) 9” #8 Threaded Steel Rod (K)

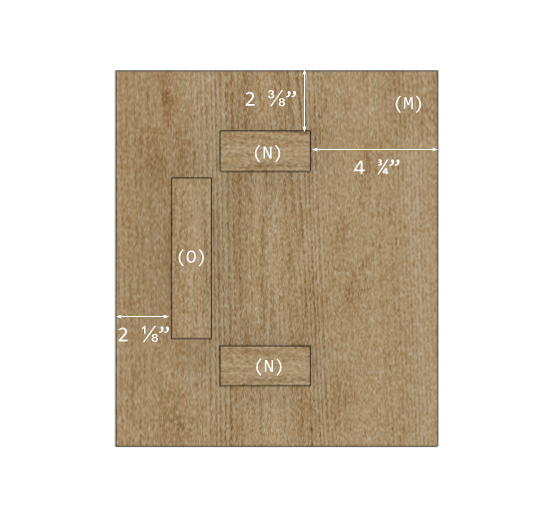

Seat

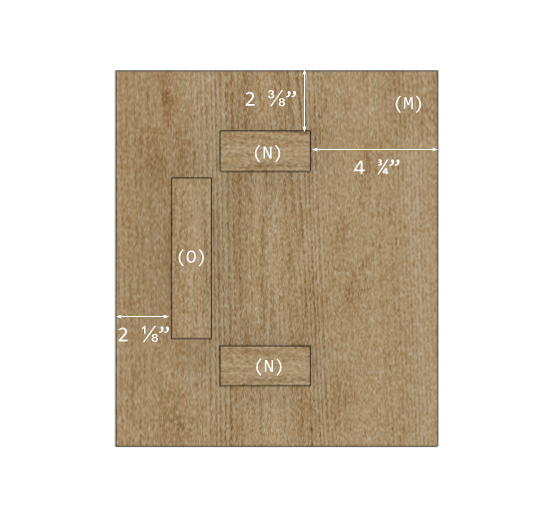

- One (1) 14” x 23” 3/4” Baltic Birch (L)

- One (1) 14” x 12” 3/4” Baltic Birch (M)

- Two (2) 1.5” x 4” 3/4” Baltic Birch (N)

- One (1) 1.5” x 6” 3/4” Baltic Birch (O)

Design

The thing I found lacking in the other DIY home workout benches is that they either had storage or were adjustable. None of them did both. This bench could be made much more easily as just a flat bench with storage.

The final dimensions of this bench are meant to be 3 ft long by 14” wide and roughly 18” high (with the cushion). The bench is designed with casters (not shown) so it can easily be rolled in and out of the way.

Steps

-

First step is to build the inner frame that will provide the structure to the bench. Cut pieces A, B and C to their proper lengths, as specified in the cutlist above.

-

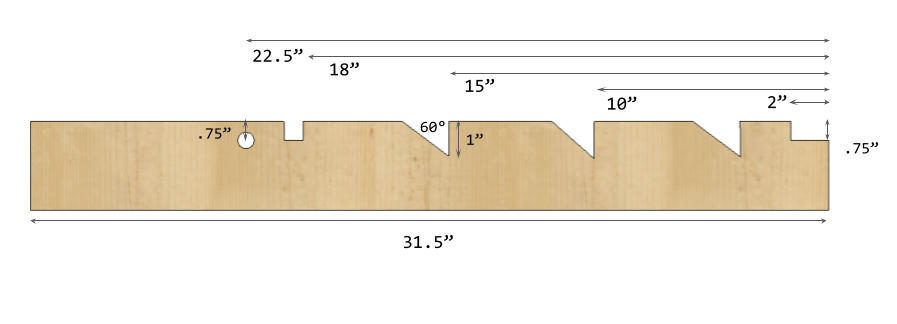

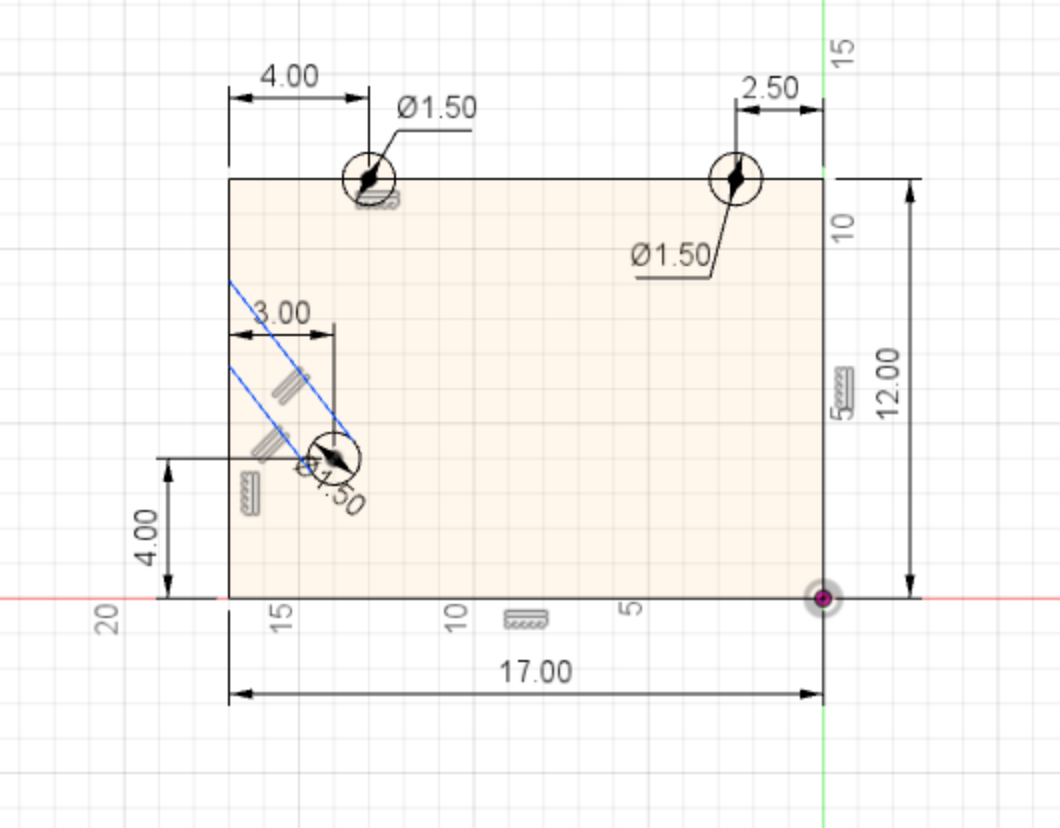

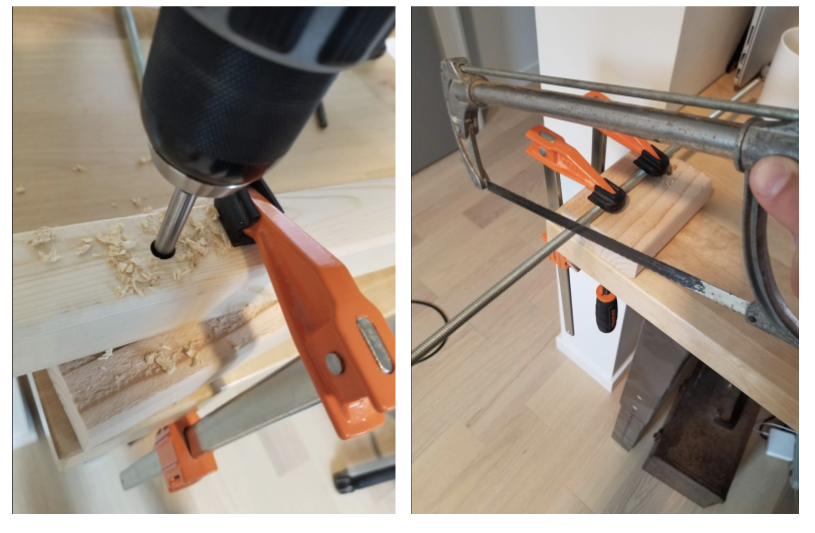

Next take two of the (A) pieces. These get additional details that make the adjustment assembly work. Use a 3/8” drill bit to drill the holes through both A pieces. Then use a jigsaw to cut out the notches and triangles as shown below. Where these go is up to you; they determine what angles are available for the seat to adjust to. Make sure the second adjustment piece is the mirror image of what’s shown here.

-

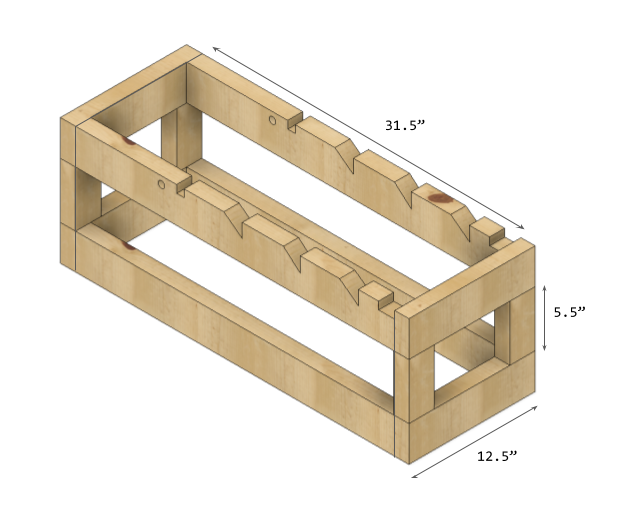

Now assemble the frame 2x4s as shown below. I used pocket screws to put everything together, but normal wood screws through the faces would work here as well.

The frame won’t be visible from the outside so it doesn’t need to be nice wood. You will use pieces A, B and C for this step.

-

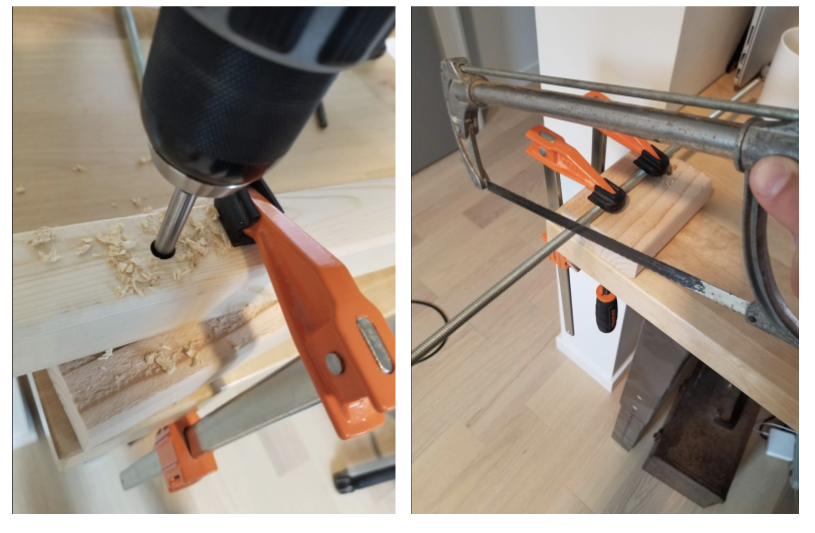

We’ll build the seat adjustment assembly next. Drill holes large enough for the #8 threaded rod to go through, 0.75” in from the end of both G pieces and both ends of the H pieces. Make sure each hole goes through exactly perpendicular to the 2x2 faces. If you have a drill press, this is the place to use it.

-

Cut the threaded rod using a hacksaw into 3 pieces, I, J and K. You’ll likely want to file the edges down to remove any steel burrs.

-

Next drill the same diameter hole 11.25” up from the center of the first hole on both the G pieces.

This will act as the fulcrum point for the support arm pieces (H) to attach.

-

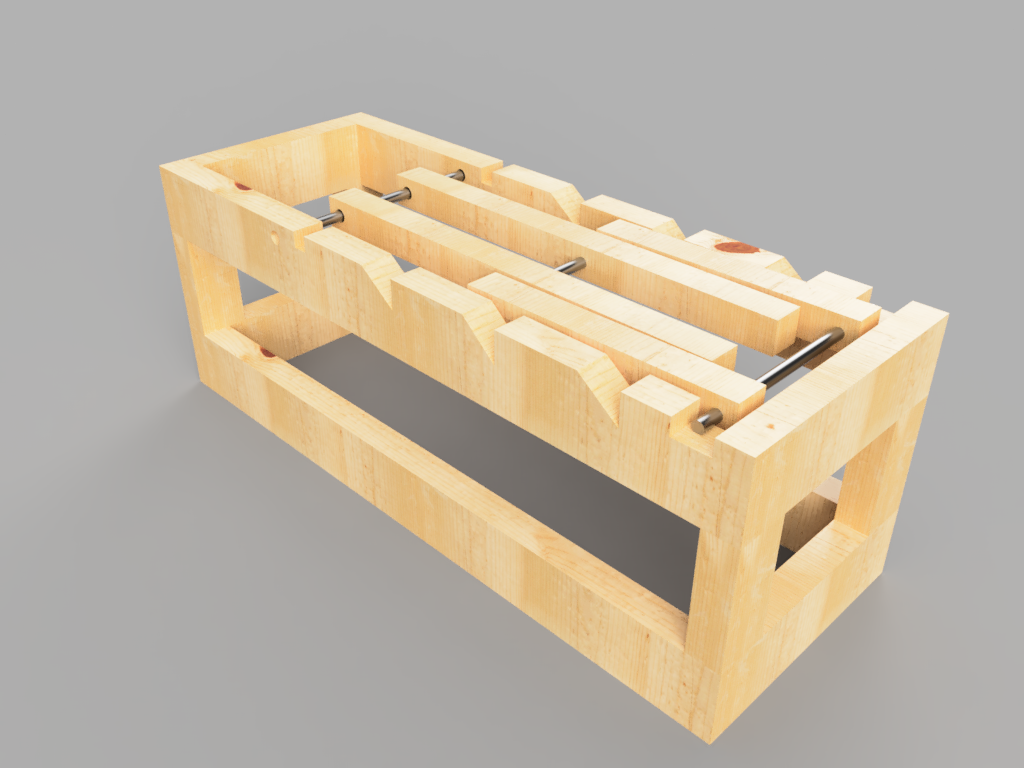

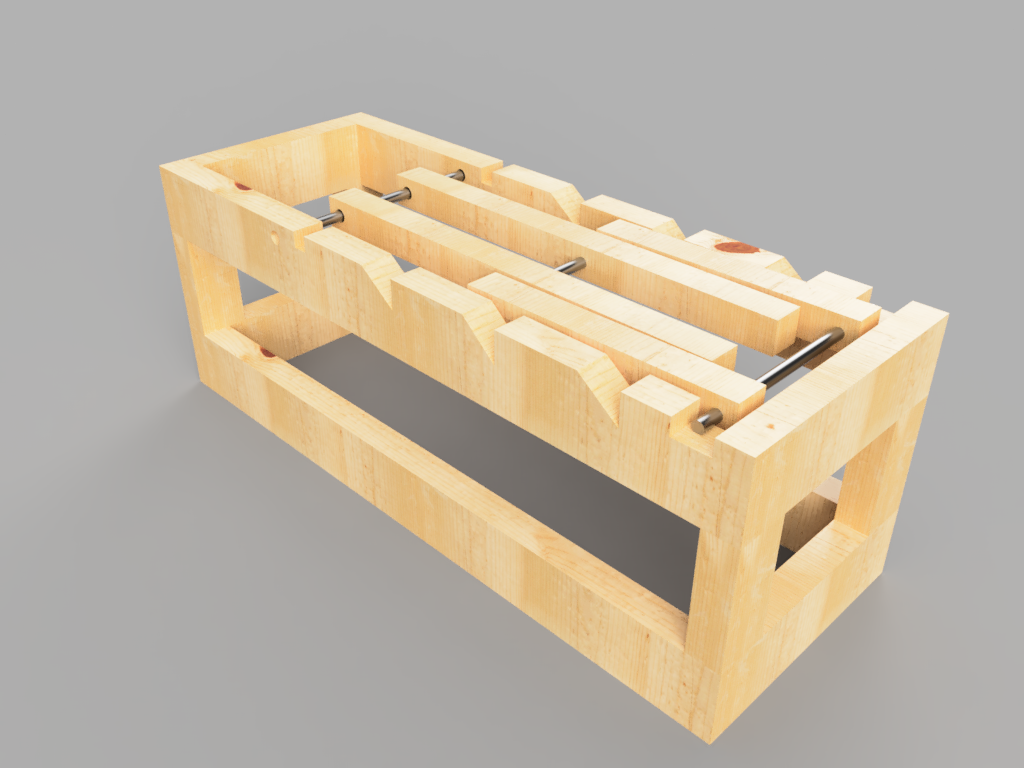

Assemble the adjustment assembly with the 2x4 frame carefully using washers and nuts on either side of every place where the 2x2s meet threaded rod. The threaded nuts will help keep the 2x2s all in alignment and allow everything to rotate freely. The final assembly should look similar to the rendering below.

-

Now that the inner workings are all built we’ll move on to cladding the frame with the outer walls. Cut and attach the outer walls (Pieces D, E and F) using a combination of pocket screws in the corners and screws between the baltic birch and the inner frame. Make sure to hide the pocket screws in the interior.

-

Attach some heavy-duty casters to the bottom of the base. In the renderings I’m showing feet but I’d always planned to use locking casters. Make sure to take the height of the casters into account for the final height. The ones for this design were 2.5” tall and the entire design takes that into consideration to reach the final height of 18”.

-

Cut the seat back (L) to size and attach this to the seat rails (G) using long wood screws. Make sure to align the top of the seat back with the outer edge of the bench walls so they are coincident. It took some finagling with clamps to get everything lined up before driving in the screws.

-

For the seat portion to work properly it has to be able to slide back and forth, allowing the adjustable back of the seat to move freely. I also wanted the seat to be easily removable. This was solved by attaching 3 small pieces of wood (N/O) to the bottom of the seat (M). The pieces serve as guide rails for the sliding and as a stopper so the seat doesn’t slide too far out the back.

-

Now that all the wood components are built it’s time to sand it all down. You’ll likely want to sand down any sharp corners especially well to reduce the chance of injuring yourself when you’re actually using the bench for working out.

-

Apply a finish everywhere that’s visible. Let it dry, sand once again and apply more finish. Do this until you’re satisfied with the level of finish.

-

We’re almost at the end. It’s time to make the cushions. To do this, take the thin 1/4” boards, which should be cut to the dimensions of the seat top and the seat back. Cut the 1” foam padding to the same dimensions. You’re going to want to glue the foam padding down to the board somehow. I used wood glue, which worked OK, but any way you attach them should work.

-

Cut your upholstery material about 3 to 4 inches larger than the back and seat boards in every direction. Lay the material face down, then lay the boards and foam padding on top so that the boards are facing out. Pulling as taut as you can, staple the material into the boards. Think of wrapping a present when you do this so you can get nice corners. I used LOTS of staples.

-

Finally, attach the cushions to the seat bottom and seat back using some short wood screws through the back of the boards. In this way the cushions should be firmly attached but the screws won’t be visible.
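If you end up with different casters or thicker foam, it’s worth re-running the height math before you cut anything. Here’s a rough sketch of the stack-up in Python. The stacking order is my assumption from the cutlist (base panel F on the casters, the 12.5” walls on top of that, then the seat panel and cushion), so adjust the numbers to match how you actually build it.

```python
# Height stack-up for the bench, in inches.
# ASSUMPTION: the parts stack in this order; change it to match your build.
layers = {
    "casters": 2.5,            # caster height quoted in the steps
    "base panel (F)": 0.75,    # 3/4" plywood, assumed to be the floor of the box
    "side walls (D/E)": 12.5,  # assumed to be the 12.5" dimension of D and E
    "seat panel (L/M)": 0.75,  # 3/4" plywood
    "cushion board": 0.25,     # 1/4" board
    "foam padding": 1.0,       # uncompressed thickness
}


def total_height(parts):
    """Sum the thickness of every layer in the stack."""
    return sum(parts.values())


height = total_height(layers)
print(f"Estimated seat height: {height:.2f} in")
```

With the numbers above the total lands just under the 18” target, which lines up with the “roughly 18” high” in the design section.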

There you have it

I know this was a hard one but I’m really happy with how it came out. It checks all my boxes and I think it looks great. If anyone out there has done or is looking to do something like this, I’d love to know. If you think I’m a bit crazy or could have accomplished this without all this work, please let me know.

Other Makers Links

[woodworking, fitness, maker, tinyhouse, diy]

30 Dec 2019

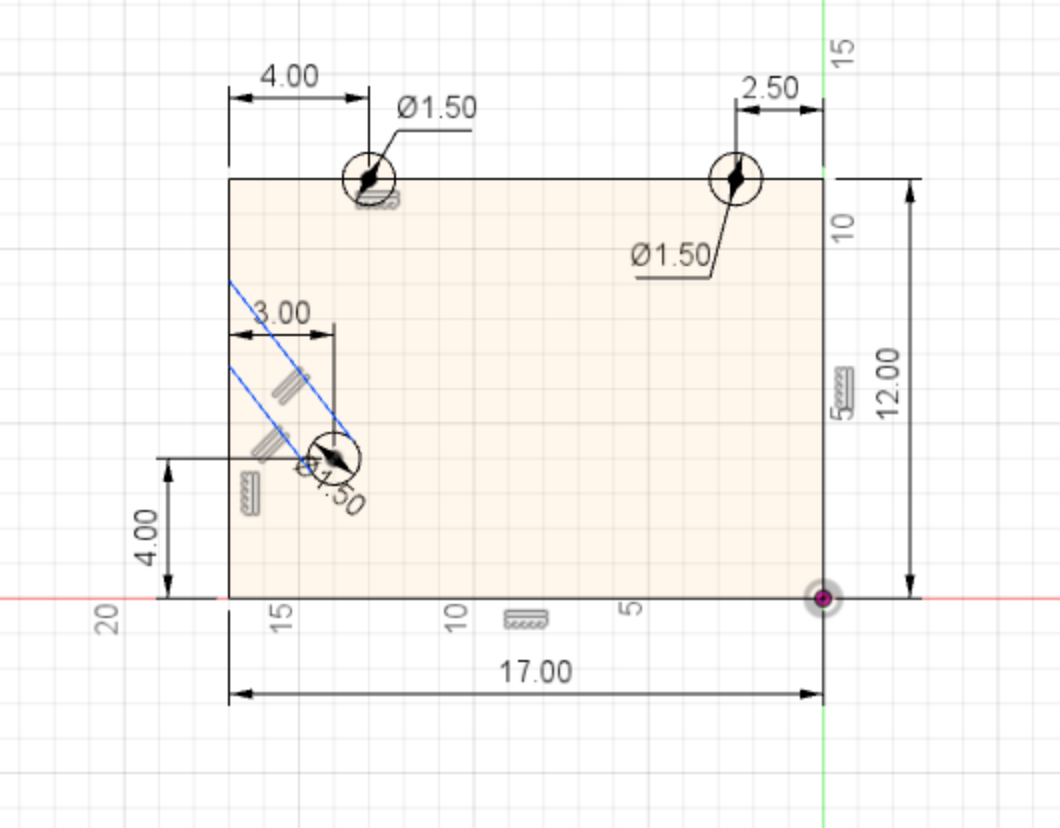

So I wanted a pull-up bar in my house but I’ve always hated those doorframe pull-up bars you get at Target. They are too short for me to hang from and not have my feet dragging on the ground. They don’t feel very sturdy to me and they can damage your door and walls. Oh and they are ugly.

Project Requirements

I always start a project with my requirements. That will help me come up with a design and some constraints.

- Solidly supports my weight for pullups

- Doesn’t block the doorway

- Tall enough for me to hang from without my feet touching the ground

- Not obtrusive / isn’t obviously visible

Supplies

- 3/4” Plywood (I used Baltic Birch)

- 3/4” Galvanized Steel Pipe

- Long Wood Screws

- Tung Oil (or whatever finish you want)

Required Equipment

- Jigsaw

- Drill

- Hole Saw

- Studfinder

- Hack Saw

- Sandpaper

- Measuring Tape

- Level

- File

Design

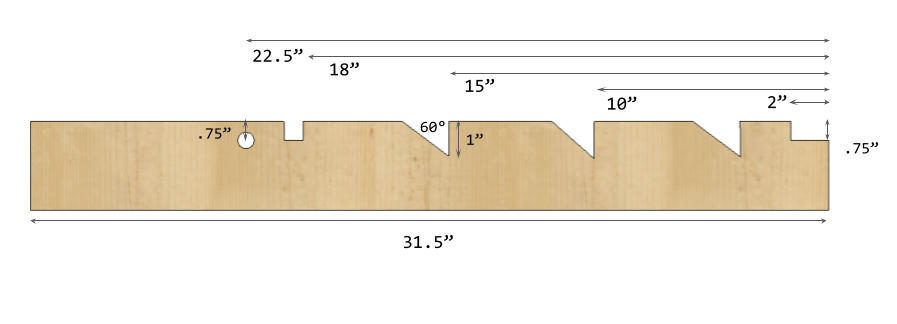

For the pull-up bar design, I’ve decided to mount two boards on either side of a hallway with a metal bar that sits in a slot, held in place just by gravity. The bar can sit at different heights and positions and can be removed and replaced if need be. I decided to go with Baltic birch because it looks clean and minimal.

Steps

-

Decide where you’re gonna want the pull-up bar. Make sure it’s somewhere where you have enough head clearance to do a pull-up. Mark off where the studs are using the studfinder.

-

Based on where the studs are relative to the wall you can figure out how long the boards need to be to hit two studs. For me there were studs at about 14” from the doorframe, so I made my wooden brackets 17” long so there was some meat between the supported part of the board and the edge.

-

Mark the boards out and cut them using a jigsaw, tablesaw, chopsaw or whatever you have.

-

Use the hole saw with the drill to remove the hole from where the pull-up bar will go.

-

Then use the jigsaw to cut a slot from the board edge to the hole.

-

Sand everything nice and smooth.

-

After making sure to remove any sawdust residue, wipe the tung oil on in a thin layer and let dry.

-

Repeat Step 7 twice more to get a thicker finish.

-

Attach the boards into the wall making sure everything is level across the hallway. Use at least three screws in a stud. Please make sure everything is good and secure.

-

Using the hacksaw, cut the steel pipe so it just fits between your walls. This will ensure as much pipe as possible is supported by the wood brackets. File the pipe down on the ends to remove any burrs in the steel.

-

Put your steel pipe up between the two boards and you have your pull-up bar!

There you have it

If you have a narrow enough hallway you too can have a custom pullup bar. I’m really happy with this design. It was inexpensive, it’s out of the way and it meets all my design requirements.

Most importantly, it holds my weight just fine!

And there it is, a custom, hallway pull-up bar. Thanks for reading.

[acapella, singing, arrangements, music]

04 Jan 2019

Since 2007, I’ve been creating multi-voice arrangements for the a cappella groups that I’ve been involved in. All of these arrangements were created by me (sometimes with the help of friends) for non-commercial purposes. These songs have been sung by Take it SLO in San Luis Obispo, California and Seatown Sound in Seattle, Washington. Please feel free to download, alter, and reproduce my works. If you would be so kind as to credit the original artist and myself that would be just peachy.

I have in the past done works for other groups with a small commission. If interested, please contact me.

*Still in current repertoire

Everyday a cappella

(As if there was anything but)

Holiday Songs

[flask, data-science, api, jinja-templating]

01 Dec 2018

This post is modeled off a workshop I gave to the Seattle Building Intelligent Applications Meetup.

You can fork the code here Github Repo

What is Flask?

Flask is a web framework for Python. In other words, it’s a way to use python to create websites, web apps and APIs.

Create your Flask Environment

I will be showing how to use Flask with Python 3. Flask releases before 2.0 also ran on Python 2, but current releases require Python 3.

-

Install virtualenv

pip3 install virtualenv

-

Set up the virtual environment

virtualenv -p python3 venv3

-

Activate the virtual environment

source venv3/bin/activate

-

Install the needed packages

pip install -r requirements.txt

Flask App Organization

Flask has some basic requirements when it comes to file and folder structure.

# the most basic flask app
yourapp/
|
| - app.py

Here’s an example of a more complex folder structure you’d see if you were serving a more complete website.

# typical app structure
yourapp/
|
| - app.py
| - static/
    | - css/
    | - resources/
    | - js/
| - templates/
    | - index.html
| - data/

Let’s build a basic flask app

-

From the root directory, open your app.py file, import flask and create your flask object.

from flask import Flask

# create the flask object

app = Flask(__name__)

-

Add your first route. Routes connect web pages to unique python functions. In this example, the root page of the site, yoursite/, should trigger the home() function and return ‘Hello World!’ as the server response to the webpage.

# routes go here
@app.route('/')
def home():
    return 'Hello World!'

-

Now just add some code at the bottom to tell python what to do when the script is run.

# script initialization
if __name__ == '__main__':
    app.run(debug=True)

Note: debug=True allows for quicker development since you don’t have to keep restarting your app when you change it.

-

Run python app.py

-

Check it out!

Your Locally Running App

-

Let’s add another route. Rather than just pure text, let’s return some HTML.

@app.route('/jazzhands')
def jazzhands():
    return "<h1>Here's some <i>Pizzazz!</i></h1>"

-

One final route in our basic app. This route will use what’s known as dynamic routing. It allows more flexibility with your urls and the ability to pass variables straight through the url. Variables must be passed in between angle brackets ‘<>’ after the root of the url, in this case /twice/.

@app.route('/twice/<int:x>')  # int says the expected data type
def twice(x):
    output = 2 * x
    return 'Two times {} is {}'.format(x, output)
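If you’d rather check the routes without opening a browser, Flask’s built-in test client can call them directly. This is a small self-contained sketch that rebuilds the three routes from above and requests each one. The /twice/abc request is my own addition to show what happens when the value doesn’t match the int converter.

```python
from flask import Flask

app = Flask(__name__)


@app.route('/')
def home():
    return 'Hello World!'


@app.route('/jazzhands')
def jazzhands():
    return "<h1>Here's some <i>Pizzazz!</i></h1>"


@app.route('/twice/<int:x>')
def twice(x):
    return 'Two times {} is {}'.format(x, 2 * x)


# the test client sends requests to the app without starting a server
client = app.test_client()

home_text = client.get('/').get_data(as_text=True)
twice_text = client.get('/twice/21').get_data(as_text=True)
# 'abc' does not match the int converter, so no route is found
bad_status = client.get('/twice/abc').status_code

print(home_text)
print(twice_text)
print(bad_status)
```

Because the converter is part of the route, a non-integer value never reaches twice() at all. Flask answers with a 404 instead.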

Now let’s do some Data Science

We will be building a survival classifier using the Titanic Survival Dataset. Our goal is to create an interface for a user to make and view predictions.

The code to read in the data, split it up and train the model has already been written for you. We’re going to focus on how to implement the model and predict with it through the flask web interface.

Note: If you want more info on models for this dataset take a look here. Titanic Dataset Modeling

Install and import additional packages

-

If you haven’t already done so, make sure you have all the required packages in your virtualenv.

pip install pandas numpy scikit-learn scipy

# or

pip install -r requirements.txt

-

Import the libraries required for modeling. render_template and request are needed for us to get data from the web interface to the flask app and then to present the results in a more visually appealing way than basic text.

from flask import Flask # you already should have this

from flask import render_template, request

# modeling packages

import pandas as pd

import numpy as np

from sklearn.linear_model import LogisticRegression

from sklearn.model_selection import train_test_split

from sklearn.metrics import classification_report

-

Build the model: The following code will read in the titanic_data.csv, clean it up, select the pertinent features, split the data into test and training sets and then train a simple logistic regression model to predict the probability of survival. Paste this code right at the start of the script initialization. The model will be available in the namespace of the flask app.

if __name__ == '__main__':
    # build a basic model for titanic survival
    # read in data and clean the gender column
    titanic_df = pd.read_csv('data/titanic_data.csv')
    titanic_df['sex_binary'] = titanic_df['sex'].map({'female': 1,
                                                      'male': 0})

    # choose our features and create test and train sets
    features = ['pclass', 'age', 'sibsp', 'parch',
                'fare', 'sex_binary', 'survived']
    train_df, test_df = train_test_split(titanic_df)
    train_df = train_df[features].dropna()
    test_df = test_df[features].dropna()
    features.remove('survived')
    X_train = train_df[features]
    y_train = train_df['survived']
    X_test = test_df[features]
    y_test = test_df['survived']

    # fit the model (the l1 penalty needs a solver that supports it,
    # such as liblinear; the default lbfgs solver does not)
    L1_logistic = LogisticRegression(C=1.0, penalty='l1', solver='liblinear')
    L1_logistic.fit(X_train, y_train)

    # check the performance
    target_names = ['Died', 'Survived']
    y_pred = L1_logistic.predict(X_test)
    print(classification_report(y_test, y_pred,
                                target_names=target_names))

    # start the app
    app.run(debug=True)

-

Rerunning the script now should show us the classification_report from the logistic regression model in the terminal. We haven’t hooked up any flask routes to the model however. Let’s change that.
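For some intuition about what the model is doing when it hands back a probability: logistic regression takes a weighted sum of the features and squashes it through a sigmoid. Here’s a minimal sketch of that calculation in plain Python. The weights and intercept below are made up for illustration, they are NOT the coefficients the fitted model learns, and survival_probability is a hypothetical helper, not part of scikit-learn.

```python
import math


def survival_probability(features, weights, intercept):
    """Logistic regression: sigmoid of the weighted sum of the features."""
    score = intercept + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-score))


# HYPOTHETICAL coefficients, for illustration only (not the fitted model's)
weights = [-1.0, -0.03, -0.3, -0.1, 0.002, 2.5]
intercept = 1.5

# pclass, age, sibsp, parch, fare, sex_binary (same order as `features`)
passenger = [1, 25, 1, 0, 250, 1]

p = survival_probability(passenger, weights, intercept)
print('{:.1f}% Chance of Survival'.format(p * 100))
```

The order of the values matters here for the same reason it matters later when we build the input array for the real model: each weight is paired with a feature by position.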

Rendering HTML templates using Flask

-

I’ve created a basic HTML template where we can build a user interface for predicting with our amazing model.

@app.route('/titanic')
def titanic():
    return render_template('titanic.html')

-

Go to the Titanic Template to see where you will be interfacing with the user.

Hooking it all up

Our model uses the following variables to predict whether someone would have survived the titanic:

- Ticket Class (categorical)

- Age (integer)

- Number of Siblings & Spouses (integer)

- Number of Children & Parents (integer)

- Ticket Fare (numerical)

- Gender (binary)

In order to hook up the web interface with the model we have to allow the user to input all of the required model parameters. The easiest way to do this is with a simple web form.

- In the titanic.html file, add the following code. This creates our webform, labels the inputs so we can grab them later and implements the predict button that sends the form to the /titanic flask route.

<!-- The web form goes here -->
<form action="/titanic" method="post" id="titanic_predict">
  <div>
    <label for="class">Ticket Class: 1, 2, or 3</label>
    <input type="text" id="class" name="predict_class" value="1">
  </div>
  <div>
    <label for="age">Age: 0 - 100 </label>
    <input type="text" id="age" name="predict_age" value="25">
  </div>
  <div>
    <label for="sibsp"># Siblings and Spouses</label>
    <input type="text" id="sibsp" name="predict_sibsp" value="1">
  </div>
  <div>
    <label for="parch"># Children and Parents</label>
    <input type="text" id="parch" name="predict_parch" value="0">
  </div>
  <div>
    <label for="fare">Ticket Fare: 0 - 500 ($) </label>
    <input type="text" id="fare" name="predict_fare" value="250">
  </div>
  <div>
    <label for="sex">Gender: M or F</label>
    <input type="text" id="sex" name="predict_sex" value="F">
  </div>
</form>
<button class="btn" type="submit" form="titanic_predict" value="Submit">Predict</button>

When you go check out your page you should see the web form there for you. Press the predict button though and you’ll get an error saying that method isn’t allowed. Flask routes by default enable the ‘GET’ method but if we want to allow any additional functionality, such as submitting data to the flask server via a webform we’ll need to enable those explicitly.

-

Update the methods parameter to allow both ‘GET’ and ‘POST’ methods on the /titanic route.

@app.route('/titanic', methods=['GET', 'POST'])
def titanic():
    return render_template('titanic.html')
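To see the difference the methods parameter makes without clicking through the browser, here’s a small standalone sketch using Flask’s test client. The /get-only and /both routes are hypothetical stand-ins for /titanic before and after the change, and they return plain strings so the example doesn’t need a template file.

```python
from flask import Flask, request

app = Flask(__name__)


@app.route('/get-only')  # hypothetical route: no methods given, so GET only
def get_only():
    return 'ok'


@app.route('/both', methods=['GET', 'POST'])  # hypothetical route
def both():
    return 'received a {}'.format(request.method)


client = app.test_client()

# post the same form data to both routes
blocked = client.post('/get-only', data={'predict_age': '25'})
allowed = client.post('/both', data={'predict_age': '25'})

print(blocked.status_code)  # 405 Method Not Allowed
print(allowed.status_code, allowed.get_data(as_text=True))
```

The 405 from the first route is the same “method isn’t allowed” error the predict button gives you before the route is updated.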

-

Request the data from the web form inside the flask function.

def titanic():
    data = {}
    if request.form:
        # get the form data
        form_data = request.form
        data['form'] = form_data
        predict_class = float(form_data['predict_class'])
        predict_age = float(form_data['predict_age'])
        predict_sibsp = float(form_data['predict_sibsp'])
        predict_parch = float(form_data['predict_parch'])
        predict_fare = float(form_data['predict_fare'])
        predict_sex = form_data['predict_sex']

    print(data)
    return render_template('titanic.html')

- Test the connection from the web page to the flask function using the predict button to make sure data is being passed from the web form by printing it to the terminal. We will be feeding this data into our model to make our predictions.

11a. Convert the predict_sex variable from a string into binary (F = 1, M = 0), matching the sex_binary encoding used to train the model.

11b. Build the numpy array of values input_data to pass into the model. The order DOES matter.

11c. Call the predict_proba() method on the logistic regression model with our input data.

11d. Grab the survival probability from the prediction and put it into the data dictionary to be passed back to the web page.

```python

def titanic():
    data = {}
    if request.form:
        # get the form data
        form_data = request.form
        data['form'] = form_data
        predict_class = float(form_data['predict_class'])
        predict_age = float(form_data['predict_age'])
        predict_sibsp = float(form_data['predict_sibsp'])
        predict_parch = float(form_data['predict_parch'])
        predict_fare = float(form_data['predict_fare'])
        predict_sex = form_data['predict_sex']

        # convert the sex from text to binary
        if predict_sex == 'M':
            sex = 0
        else:
            sex = 1

        input_data = np.array([predict_class,
                               predict_age,
                               predict_sibsp,
                               predict_parch,
                               predict_fare,
                               sex])

        # get prediction
        prediction = L1_logistic.predict_proba(input_data.reshape(1, -1))
        prediction = prediction[0][1]  # probability of survival
        data['prediction'] = '{:.1f}% Chance of Survival'.format(prediction * 100)

    return render_template('titanic.html', data=data)

```
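One thing the route above doesn’t handle is bad input: typing “first” for the ticket class will make float() raise an error. Here’s one possible way to pull the parsing into a helper that reports the problem instead. form_to_features and FEATURE_ORDER are hypothetical names I’m introducing for this sketch, and a plain dict stands in for request.form.

```python
# numeric fields, in the same order the model was trained on
FEATURE_ORDER = ['predict_class', 'predict_age', 'predict_sibsp',
                 'predict_parch', 'predict_fare']


def form_to_features(form):
    """Turn submitted form values into the model's input row.

    Returns (row, None) on success or (None, message) on bad input.
    """
    try:
        row = [float(form[name]) for name in FEATURE_ORDER]
    except (KeyError, ValueError) as err:
        return None, 'Bad or missing value: {}'.format(err)

    sex = form.get('predict_sex', '').strip().upper()
    if sex not in ('M', 'F'):
        return None, 'Gender must be M or F'

    row.append(1.0 if sex == 'F' else 0.0)  # matches sex_binary in training
    return row, None


# a plain dict stands in for request.form here
good, good_err = form_to_features({
    'predict_class': '1', 'predict_age': '25', 'predict_sibsp': '1',
    'predict_parch': '0', 'predict_fare': '250', 'predict_sex': 'f',
})
bad, bad_err = form_to_features({'predict_class': 'first'})

print(good, good_err)
print(bad, bad_err)
```

Inside the route you could then pass the error message back through the data dictionary rather than letting the request fail.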

Jinja Templating

We are now getting the input data from the form and predicting with it. Now we have to tell the client-side how to display the information. We will be using Jinja templating to control how data from the server is displayed.

-

Add the following to titanic.html. In Jinja, we access data objects inside double curly braces, i.e. `{{ }}`.

<div class="col-lg-6">

<!-- Result presentation goes here -->

</div>

Take a look at the /titanic page and you can see that all the bits and pieces are hooked up. Time to do just a bit of UI work. Jinja allows you to use python-like logic to control the HTML that is displayed.

-

In the titanic.html file, use a simple if statement to prevent displaying anything if a prediction is not present. Then add some HTML to structure what the user will see. Here we want to show the prediction and the input parameters they put into the model.

<div class="col-lg-6">

<!-- Result presentation goes here -->

</div>
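To give a feel for how the Jinja if statement behaves, here’s a sketch that renders a small template straight from Python using the jinja2 package (it gets installed along with Flask). The markup is hypothetical, the real titanic.html may be laid out differently, and the prediction string is just a sample value.

```python
from jinja2 import Template

# HYPOTHETICAL markup: the real titanic.html may be laid out differently
template = Template("""
{% if data.prediction %}
<h2>{{ data.prediction }}</h2>
<ul>
{% for name, value in data.form.items() %}
  <li>{{ name }}: {{ value }}</li>
{% endfor %}
</ul>
{% endif %}
""")

# no prediction yet (e.g. the first GET request): nothing is rendered
empty = template.render(data={})

# sample values standing in for what the flask route would pass along
filled = template.render(data={
    'prediction': '91.2% Chance of Survival',
    'form': {'predict_age': '25', 'predict_sex': 'F'},
})

print(repr(empty.strip()))
print(filled)
```

The same data dictionary that the titanic() route passes to render_template is what the template sees as data here.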

That’s It! (we hope)

Fully Hooked Up Titanic Survival Model

At this point you should hopefully have a fully functional Flask-based web app that trains a model off real data and presents a simple interface to a user to allow them to make a prediction with the model and see the results.

More Resources